Introduction

High-quality clinical research provides essential data to direct care and improve patient outcomes in plastic and reconstructive surgery (PRS). Its impact is greatest when the data are generated from populations that are the same as, or similar to, the patients receiving evidence-based care, which argues for a central role for high-impact clinical research in PRS practice. Unfortunately, high-quality research is uncommon in the published PRS literature (only 15.7% of published studies are level I–II),1 and the research is often drawn from populations that differ from those in Australia and Aotearoa New Zealand (ANZ).

Research priority setting (RPS) seeks to identify future research topics that will best meet the needs of patient populations and can aid funding bodies in supporting research that will have the best clinical impact. These exercises produce an ordered list (for example, the top 10 unanswered research questions in a topic or field) and can consider the differences in the incidence of specific conditions in different populations. For example, melanoma accounts for 11 per cent of new cancer diagnoses in Australia, almost triple the proportion in the United Kingdom (UK).2,3 The exercises commonly align clinical needs with research needs but have not been applied to PRS in ANZ.4 Organisations, including the James Lind Alliance5 and the Cochrane Priority Setting Methods Group,6 have proposed priority setting guidelines, including the use of the Delphi technique. However, the methods used in practice may vary. Henderson and colleagues prioritised PRS research in the UK using a modified Delphi method and called for the study to be replicated in other settings.7

A PRS research prioritisation exercise in ANZ should consider specific clinical needs. This study aimed to inform readers and guide the design of a future ANZ PRS prioritisation exercise. The objective was to systematically review the use of the Delphi technique in PRS research prioritisation.

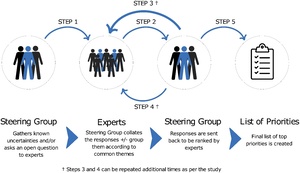

The Delphi process is a series of steps for ‘forecasting using the collective opinion of panel members’ to gain a consensus of expert opinions on a topic.8,9 There are variants, most notably the ‘modified’ Delphi. However, the fundamental tenets remain the same: engaging experts, controlled feedback, anonymity, iteration by rounds and achieving a group response (Figure 1).10

First, a question is posed to a group of people with experience and interest in RPS in a given area. This group may include clinicians, patients and carers. Responses are then collated and the expert steering group identifies the most common themes or questions by grouping similar responses.11 The number of grouped responses varies with the quality and quantity of answers and is decided by the steering group. The grouped responses are redistributed to study participants, who rank them by importance. This can continue over multiple rounds until consensus is reached, or the study may cease after a predefined number of rounds. Studies can be done in collaboration with established organisations that are influential in determining research funding and future research priorities in the field.
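The iteration described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: items are aggregated by mean rank, and 'consensus' is taken to mean that the top-ranked items are unchanged between two consecutive rounds. Neither rule comes from the reviewed studies, and the function names, the `top_k` parameter and the example questions are hypothetical. In a real exercise each round is collected only after feedback from the previous one, whereas here the rounds are supplied up front for brevity.

```python
from statistics import mean


def aggregate(rankings):
    """Order items by mean rank (1 = most important) across participants."""
    items = rankings[0]
    mean_rank = {
        item: mean(r.index(item) + 1 for r in rankings) for item in items
    }
    # Ties are broken alphabetically so the result is deterministic.
    return sorted(items, key=lambda item: (mean_rank[item], item))


def run_delphi(rounds, max_rounds=3, top_k=2):
    """Stop when the top_k items are stable (assumed consensus rule)
    or when the predefined number of rounds is reached.

    `rounds` is a list of rounds; each round is a list of participant
    rankings, and each ranking is a list of items, most important first.
    """
    if not rounds:
        raise ValueError("at least one round of rankings is required")
    previous = None
    for number, rankings in enumerate(rounds[:max_rounds], start=1):
        ordered = aggregate(rankings)
        if previous is not None and ordered[:top_k] == previous[:top_k]:
            return number, ordered[:top_k]
        previous = ordered
    return min(len(rounds), max_rounds), previous[:top_k]


# Hypothetical rankings from three participants over two rounds.
round_one = [["Q1", "Q2", "Q3"], ["Q2", "Q1", "Q3"], ["Q2", "Q3", "Q1"]]
round_two = [["Q2", "Q1", "Q3"], ["Q2", "Q1", "Q3"], ["Q1", "Q2", "Q3"]]

rounds_used, priorities = run_delphi([round_one, round_two])
print(rounds_used, priorities)
```

Declaring the stopping rule (`max_rounds` and the stability check) before data collection corresponds to the kind of predefined criteria that the quality assessment in this review looks for.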

In this study, we describe the context of the Delphi method in RPS exercises and systematically review the characteristics and quality of published PRS studies. Primary outcomes included the number of studies and subject areas. Secondary outcomes were the type of Delphi method and study results (including journal impact factor, implementation plans and dissemination plans).

Methods

We performed a narrative systematic review of the methods, results and quality of Delphi studies used for RPS in PRS. For transparency, we followed PRISMA guidelines with a predefined protocol and shared our study documents on an open web repository (https://doi.org/10.17605/OSF.IO/7TGZ2).12 The study did not require ethics approval.

Search strategy and inclusion criteria

A research librarian (CH) designed a comprehensive search strategy to identify the use of the Delphi method in PRS research prioritisation, which was peer-reviewed by another librarian (RR) (see Supplementary material 1). The MEDLINE, EMBASE, Emcare and Web of Science databases were searched on 9 December 2022, following the PRISMA-S guidelines.13 The search was rerun on 20 December 2022. No search limits or filters were applied. A limited amount of citation searching was done. The search retrieved 550 citations and 426 remained after duplicate removal. A separate (undocumented) strategy was used to identify citations that discussed the Delphi method in general for context and background, which included grey literature from plastic surgery associations and societies. All authors contributed to this.

Our inclusion criteria were

- studies using a Delphi or modified Delphi method to determine RPS in PRS and its subspecialties.

Our exclusion criteria were

- studies assessing RPS in areas other than PRS (and its subspecialties); or
- priority setting studies using a methodology other than Delphi or modified Delphi; or
- studies published in non-peer-reviewed journals or protocol studies; or
- non-original research; or
- non-English language articles; or
- studies published prior to 9 December 1997 (25 years ago).

Data extraction and variables

Reviewers AB, GS and SG designed a data extraction template in Google Sheets (Google LLC, Mountain View, California). Each reviewer piloted the template with at least one Delphi study and iteratively improved it until agreement was reached on the variables (see Supplementary material 2).

Included studies were assessed for methodology, including geographical scale, modified versus standard method, the number of rounds and experts, types of experts and stakeholders, and their surgical speciality (with reference to the Royal Australasian College of Surgeons Plastic Surgery Curriculum).14

We classified a study as using a ‘modified’ Delphi method when its authors declared it as such. Study results were assessed for inclusion of the numbers of uncertainties, priorities and responders at the end of each round, journal impact factor, and implementation and dissemination plans.

Assessment of bias

We performed a quality assessment on the four domains of quality identified by Diamond and colleagues.15 The domains were: defined stopping criteria, a specified planned number of rounds, reproducible criteria for selecting participants, and criteria for dropping items at each round. These were simple and took account of Delphi variants. Each study scored 1 for the presence and 0 for the absence of each domain, and the overall score was classified as high (0 to 2), moderate (3) or low (4) risk of bias. We used the risk-of-bias visualisation (robvis) tool to represent bias graphically.16
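The scoring rule can be expressed as a short function. The Python sketch below is illustrative only: the domain keys and the example study are hypothetical labels chosen for readability, while the thresholds follow the rule stated above.

```python
# The four quality domains listed in the text; the key names are assumptions.
DOMAINS = (
    "stopping_criteria",
    "planned_rounds",
    "participant_selection",
    "item_dropping_criteria",
)


def score_study(reported):
    """Return (score, risk of bias) for a mapping of domain name -> bool."""
    missing = [d for d in DOMAINS if d not in reported]
    if missing:
        raise ValueError(f"missing domains: {missing}")
    # One point for each domain that the study reports.
    score = sum(1 for d in DOMAINS if reported[d])
    if score <= 2:
        risk = "high"
    elif score == 3:
        risk = "moderate"
    else:
        risk = "low"
    return score, risk


# Hypothetical study, not taken from the review's data.
example = {
    "stopping_criteria": False,
    "planned_rounds": True,
    "participant_selection": True,
    "item_dropping_criteria": False,
}
print(score_study(example))  # (2, 'high')
```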

Study selection and data collection

The Covidence systematic review software (Veritas Health Innovation, Melbourne, Australia) was used for screening titles, abstracts and full texts, and for data extraction and quality assessment.17 Reviewers independently performed each task in duplicate, with a third reviewer arbitrating where conflicts arose.

Synthesis and statistical analysis

Variables were synthesised in a summary table with a graphical overview of bias. Descriptive statistics were calculated using R Statistical Software (v4.2.2; R Core Team 2021). Interobserver reliability was assessed using Cohen’s Kappa and the proportion of agreement during screening.
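For readers unfamiliar with these two measures, the sketch below shows how the proportion of agreement and Cohen's Kappa are derived from two reviewers' screening decisions. It is written in plain Python for illustration and is not the R code used in this study; the function name and the example decisions are hypothetical.

```python
from collections import Counter


def agreement_and_kappa(rater_a, rater_b):
    """Return (proportion of agreement, Cohen's kappa) for two raters."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must rate the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of each rater's marginal proportions per label.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    labels = set(counts_a) | set(counts_b)
    expected = sum(counts_a[label] * counts_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        # Both raters used a single identical label; kappa is undefined (0/0).
        return observed, float("nan")
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa


# Hypothetical screening decisions for ten abstracts.
reviewer_1 = ["include", "exclude", "exclude", "exclude", "include",
              "exclude", "exclude", "exclude", "exclude", "exclude"]
reviewer_2 = ["include", "exclude", "exclude", "include", "exclude",
              "exclude", "exclude", "exclude", "exclude", "exclude"]

proportion, kappa = agreement_and_kappa(reviewer_1, reviewer_2)
print(round(proportion, 3), round(kappa, 3))
```

In this invented example the reviewers agree on 8 of 10 abstracts, yet Kappa is much lower than 0.8 because most agreement on 'exclude' is expected by chance when exclusions dominate. This is why both measures are reported for screening.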

Results

Included studies

The search strategy identified 550 citations, of which 426 remained after removal of 124 duplicates. Of these, 418 were excluded after abstract and title screening, and one was excluded after full-text screening,18 leaving seven included studies. The process is summarised in Figure 2. A summary of the seven included studies is shown in Table 1, with complete data in Supplementary material 2. Agreement between reviewers during screening (Cohen’s Kappa) ranged from 0.51 (moderate) to 1 (perfect) (Table 2). Two studies involved breast reconstruction,19,20 while the remainder covered aesthetics,21 craniofacial,11 burns,22 general plastics,7 and wound and tissue repair.23 Funding was received by four out of seven studies.11,19,22,23
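The screening counts reported in the Methods and Results can be reconciled with simple arithmetic. The short Python check below uses only numbers stated in the text (550 citations retrieved, 426 remaining after duplicate removal, 418 excluded at title and abstract screening, one excluded at full text); the variable names are illustrative.

```python
identified = 550               # citations retrieved by the search
after_deduplication = 426      # citations remaining after duplicate removal
excluded_title_abstract = 418  # excluded at title and abstract screening
excluded_full_text = 1         # excluded at full-text screening

duplicates_removed = identified - after_deduplication
full_text_assessed = after_deduplication - excluded_title_abstract
included = full_text_assessed - excluded_full_text

print(duplicates_removed, full_text_assessed, included)  # 124 8 7
```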

Included studies’ methods

Out of the seven studies, four used the modified Delphi method7,11,20,21 and five were on a national scale.7,11,20–22 The median number of survey rounds was three. Methods were heterogeneous across the included studies. The study by Weber and colleagues was based on a comprehensive protocol.19 Priorities were gathered with an international, electronic Delphi survey over two rounds before expert consensus was reached in person at a conference. Sadideen and colleagues evaluated research priorities for improving the safety of the ‘Brazilian butt lift’ over two electronic rounds.21 Kanapathy and colleagues followed the James Lind Alliance method, gathering uncertainties and finishing with a three-round Delphi.20 Henderson and colleagues gathered information from consultant stakeholders in the UK’s national plastic surgery organisation (British Association of Plastic, Reconstructive and Aesthetic Surgeons) with a free web survey ranked by an expert group and a stakeholder group.7 Hamlet and colleagues used a mixed in-person and electronic three-round Delphi with Qualtrics software (Qualtrics, Provo, UT) to ascertain facial palsy priorities.11 Gibran and colleagues used the professional Delphi SurveyLet software (Calibrum Inc, Utah) to provide controlled feedback over four rounds.22 Cowman and colleagues used free web survey software over four rounds to rank research and education priorities in wound and tissue repair.23 Only two studies reported using a predefined protocol.19,22

Included studies’ results

The median initial number of research priorities was 127 (range 11–2764). After completion of the Delphi rounds, the median number of final priorities was 15 (range 3–36). Studies tended to have more participants in the first round (median 85) than in the final round (median 63), because of non-responders and the refinement of groups. Dissemination plans invariably involved publication in peer-reviewed journals; the journals of publication had a median impact factor of 3.022 (range 3.022–54.433). None of the included articles were published as open access. Implementation plans varied when present but were absent in most studies.11,20,21,23

Bias in included studies

Most of the included studies had a high or moderate risk of bias. All included studies specified a planned number of rounds. Criteria for dropping or merging items were least often reported, followed by stopping criteria (Figure 3).

Discussion

We systematically reviewed the methods, results and risk of bias of seven studies using the Delphi method for PRS research prioritisation. Study subspecialty areas included breast, burns, aesthetics, craniofacial and general plastics. There was heterogeneity of methods, which allowed adaptation to the authors’ needs, experience and resources, but also made direct comparison between studies more difficult. All included studies were disseminated through subscription, peer-reviewed journals. Studies had few documented implementation plans, and the risk of bias was predominantly high.

Diamond and colleagues15 developed four simple and generalisable criteria for Delphi studies, implemented by Banno and colleagues.24 Diamond and colleagues regarded the criteria for stopping, and for dropping or merging items at each round, as quality markers that should be declared beforehand. Most included studies did not achieve this standard. While the criteria were not markers of absolute quality, the accompanying protocols would be hallmarks of good research by encouraging reproducibility. Junger and colleagues developed the CREDES reporting guidelines for Delphi studies in palliative care, which, while informative, may not be fully generalisable to the surgical context.25 The development of more generalised consensus guidelines, the Accurate Consensus Reporting Document (ACCORD), through the Enhancing the Quality and Transparency of Health Research (EQUATOR) network may facilitate quality assessment.26,27 ACCORD had not been published at the time of writing.

Within the included studies, the majority had a high or moderate risk of bias. Included studies also had significant differences in methods. While this allows the adaptation of studies to the authors’ experience and resources, it makes a direct comparison between studies difficult. It also makes the argument for resource allocation based on Delphi studies less convincing. Variable methodology and high risk of bias highlight the need for standardised, reproducible guideline development surrounding the creation and reporting of Delphi studies.

None of the included studies considered research priorities in ANZ, and it has been documented that research priorities are likely contextual and vary depending on the healthcare system.28 This highlights the need for robust regional RPS exercises to ensure that research priorities are aligned with local clinical needs. Lee and colleagues identified the need for clear implementation plans and improved multidisciplinary stakeholder involvement, findings that are reinforced by our systematic review.7

Limitations of this study include our assessment of study quality in the absence of a validated instrument. However, we did use the guidelines developed by Diamond and colleagues, and considered components of the CREDES Delphi reporting guidelines within our data extraction table.15,25 Additionally, although we recorded study funding as a yes/no variable, further analysis of the level of funding would have been informative to assess whether a study’s funding level correlates with its quality and impact. This review did not include a search of the James Lind Alliance database. As such, some relevant studies may have been missed.

Strengths of this study include the participation of a senior librarian in developing and supporting the search strategy, which was peer-reviewed and applied to four databases. Our data extraction and quality assessment tools were developed iteratively between authors. Additionally, all screening and data extraction were performed independently and in duplicate by at least two authors, with impartial arbitration of conflicts by a third author. This systematic review had a predefined protocol, which was developed following the PRISMA guidelines, with study documents available online for transparency.12

Conclusions

Delphi-based research prioritisation exercises have been used to prioritise research in PRS and its subspecialties. Henderson and colleagues addressed RPS for plastic surgery but excluded hand surgery due to a similar concurrent hand surgery prioritisation study.7 Methodology among included studies was highly variable, making it difficult to draw direct comparisons between studies. Six of the seven studies had a moderate or high risk of bias, with only Hamlet and colleagues addressing criteria for dropping items at each Delphi round.11 Although all included studies were published in peer-reviewed journals, implementation plans for how the studies would influence future research were often absent and, where present, lacked specificity. Future Delphi RPS exercises should focus on incorporating a more standardised Delphi methodology and consider explicitly addressing the quality assessment criteria first discussed by Diamond and colleagues.15

Acknowledgements

The Australasian Clinical Trials in Plastic, Reconstructive and Aesthetic Surgery (ACTPRAS) collaboration is developing the Plastic Surgery Research Priority Setting (PRESET) study in ANZ, based on the analysis presented here. Expressions of interest from doctors, nurses, allied health practitioners, patients, and carers involved in plastic surgery and its subspecialties are invited through the ACTPRAS website (actpras.com).

We would like to thank James Henderson, Adam Reid and Abhilash Jain for sharing their method; Alice Lee for information on RPS partnerships; Rina Rukmini (RR) for reviewing the search strategy; Cody Frear for his assistance with Covidence and reviewing the manuscript; and Emma Gregory for reviewing the manuscript.

Conflicts of interest

The authors have no conflicts of interest to disclose.

Funding support

The authors received no financial support for the research, authorship, and/or publication of this article.